They’re on a mission to make new technologies friendlier for communities of all kinds — including and especially LGBTQIA+ people.

Hunter College Assistant Computer Science Professor Raj Korpan and his student team think queer communities must shape artificial intelligence, robotics, and other emerging technologies — or risk being misunderstood, misgendered, or even having their privacy invaded by machines built without such sensitivities.

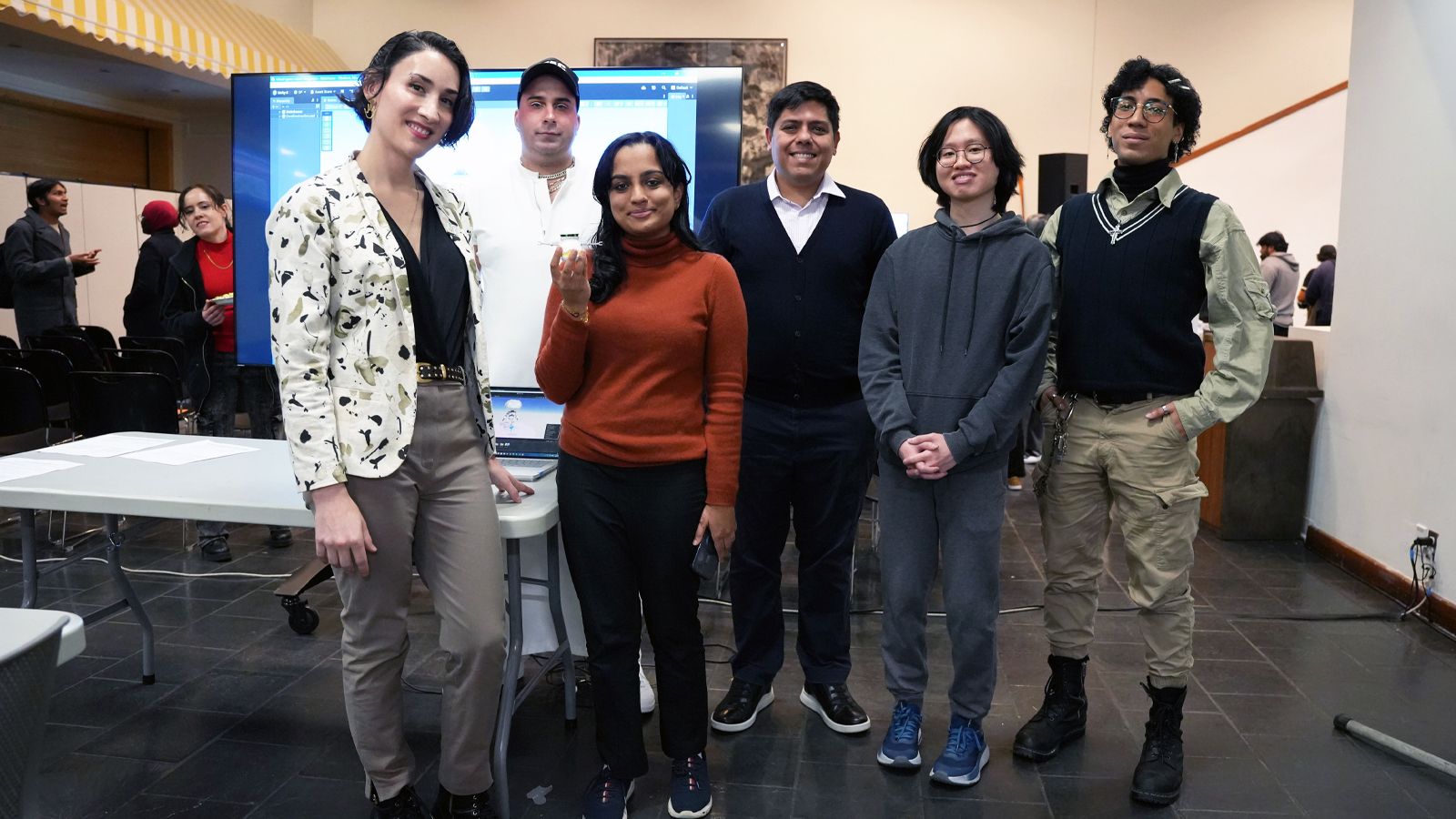

He and his team of five student scientists have created a queer-affirming robot companion, PAX, that they demonstrated at an event, “Beyond Boundaries: Creating AI and Robotics that Reflect Us All.” “Beyond Boundaries” was the third session of the “HUMANities x Tech: Exploring the Future of AI and Tech Through Creative and Critical Lenses” series put on by the School of Arts & Sciences.

“We hope HUMANities x Tech will be one step in our commitment to equipping our students to be technologically fluent, ethically grounded thinkers prepared to shape the future of emerging technologies,” said Dean of Arts & Sciences Erica Chito Childs.

Korpan argues that technology reflects the biases of its makers, reinforcing dynamics that can put queer people at risk for discrimination. The large-scale algorithms used in recommendation systems, search engines, surveillance tools, and automated content moderation shape who is heard, who is believed, who is protected, and who is erased. That’s a crucial concern as AI-powered systems become omnipresent in modern life.

“These systems really do inherit inherent assumptions and world views, and they represent the people that create them, and the data that is generated from our society,” he said at the event. “We keep hearing the same message in our research with the LGBTQ+ community, with black women, with people who are disabled or neurodiverse — they keep telling us that they don't believe these systems were made for them.”

Korpan said that such experiences with technology disadvantage diverse users.

“When technology misgenders and stereotypes you, it erases your identity, and it creates more exclusion and mistrust,” he said. “We don't experience robots as just machines. We treat AI and robots as social beings, and we expect them to understand us and the nuance of our lived experience, and they don't. But we have an opportunity to change that, to build technology that honors us and the full spectrum of human lived experience.”

Korpan’s students — who span disciplines including computer science, Japanese, Asian American Studies, philosophy, and data visualization — spoke as a panel about the experiences that led them to robotics research and the insights they had gained from constructing the queer-friendly companion.

Raitah Jinnat, Jackie Yee, Keys Rigual, Alexandria Thylane Rohn, and Daniel Foulen discussed their experiences with inclusive or identity-aware robotics, highlighting the importance of community-led, inclusive design. They shared their roles in the lab, from prototype design to participatory design, and offered advice on getting into research, emphasizing genuine interest over prestige.

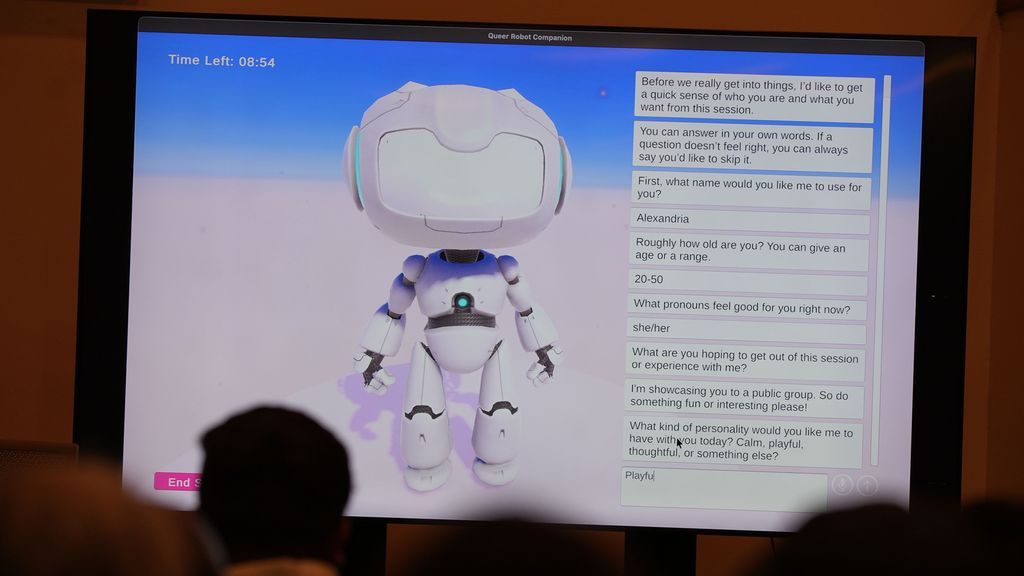

The group also discussed challenges in creating inclusive robots and strategies for addressing them, including gender-ambiguous voices and gender fluidity.

“I’m very surprised at the lens on humanity that something that seems so inhuman — robotics — has given me,” Jinnat said. “I think robotics is just another way to understand how humans work.”

An onscreen look at the robot companion created by Korpan’s team.

The event concluded with interactive activities for attendees to envision the future of inclusive, community-led robotics.

Korpan’s study, funded with a $5,000 seed grant from the CUNY Black, Race, and Ethnic Studies Collaboration Hub, involved a survey of 147 queer technology users and four focus groups.

Korpan is a co-founder of Queer in Robotics, an organization devoted to advancing queer representation in robotics.

He also opened a recent discussion at Hunter’s Roosevelt House Public Policy Institute on Care and Courage: Facing LGBTphobic Political Violence in Brazil, a new book by the Brazilian group VoteLGBT documenting violence against queer Brazilian political leaders both in person and online.

Korpan says algorithms can amplify hatred exponentially.

“Such systems can spread hate faster than communities can respond to it,” he said. “For example, AI often mislabels queer language as unsafe or forbidden, and it reinforces policing and surveillance that targets marginalized communities. When we talk about political violence, we can’t treat AI as something separate. AI is part of the infrastructure of power now.”

Still, Korpan feels optimistic that technologists such as himself can train AI to be a force for good: “Queer communities have always made technology into something else — something that can be liberatory, creative, protective, and joyful, even when it wasn't designed for us.”